Amid the devastation of the Los Angeles County wildfires – scorching an area twice the size of Manhattan – McAfee threat researchers have identified and verified a rise in AI-generated deepfakes and misinformation, including startling but false images of the Hollywood sign engulfed in flames.

Debunking the Myth: Hollywood Sign Safe Amid Wildfire Rumors on Social Media

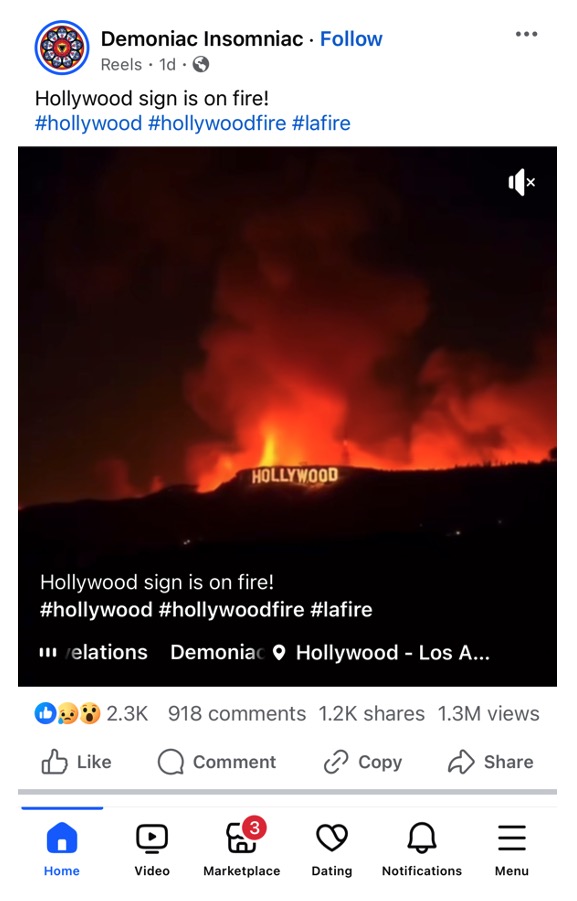

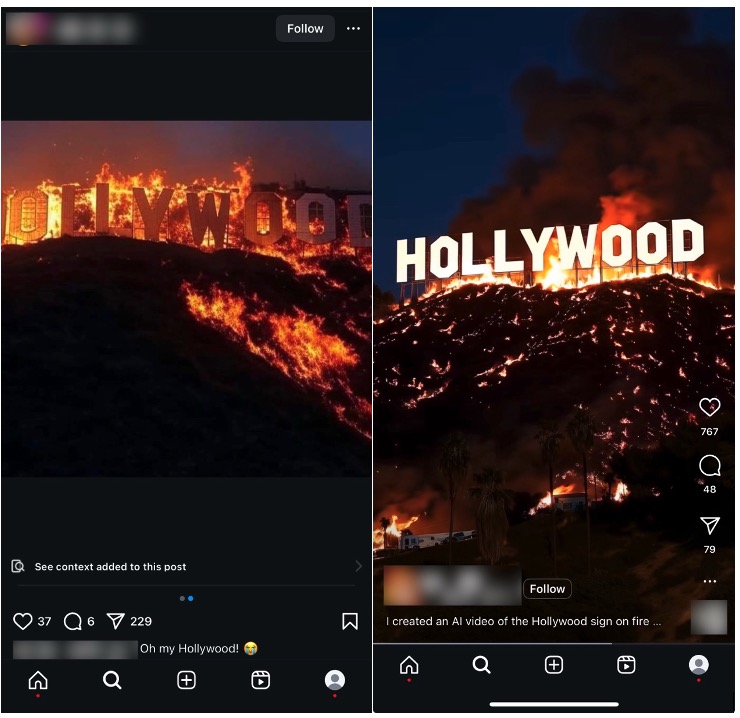

Social media and local broadcast news have been flooded with deceptive images claiming the Hollywood sign is engulfed in flames, with many people alleging that the iconic landmark is “surrounded by fire.”

Figure 1. AI-generated image shared on Facebook on January 9th, 2025.

Fact check: The Hollywood sign is still standing and is intact. A live feed of the Hollywood sign clearly shows the sign is not currently in harm’s way or engulfed in flames.

Figure 2. Live view of the Hollywood sign taken at 3:29 PT on Friday, January 10th, 2025.

McAfee researchers have examined dozens of images shared across X, Facebook, TikTok and Instagram, and have verified these are indeed AI-generated images and videos. In addition to analysis from our own threat researchers, McAfee’s image deepfake detection technology has flagged the images shown here (and many more) of the Hollywood Hills as AI-generated, with the fire serving as a key factor in its analysis.

McAfee’s investigation traced many of the images back to Gemini, an AI-based image generation platform. This finding underscores the increasing sophistication of fake image synthesis: fake images and videos can be created in mere seconds and can reach more than a million views in just 24 hours, as was the case with the Facebook post shown below.

Figure 3. Screenshot of a deepfake video of the Hollywood sign on fire. This video was discovered on Facebook and had already reached 1.3 million views in 24 hours.

McAfee CTO, Steve Grobman states, “AI tools have supercharged the spread of disinformation and misinformation, enabling false content—like recent fake images of the Hollywood sign engulfed in flames—to circulate at unprecedented speed. This makes it critical for social media users to keep their guard up, approach viral posts with skepticism, and verify sources to distinguish fact from fiction.”

Figure 4. McAfee’s advanced AI models identify images that have been modified or created using AI. The heatmap highlights the areas used to identify and confirm AI usage.

When Social Media Fans the Flames of Misinformation

AI-generated still images are incredibly easy to produce. In less than a minute, we were able to produce a convincing image of the Hollywood sign on fire, for free, with an AI image-generating Android app (we have not published these images, only those found on social media). There are many such apps to choose from. Some do filter for violent and other objectionable content. However, images like the Hollywood sign on fire fall outside of normal guardrails. Additionally, the business model of many of these apps includes free credits as a trial, making it quick and easy to create and share images. AI image generation is a widely available and easily accessible tool used in many misinformation campaigns.

See below for more examples:

Figure 5. Examples on Instagram.

Upon closer inspection, some images carried clearly visible watermarks from generative AI tools such as Grok. While this might be an obvious telltale sign for some people, many others are not familiar with such watermarks or do not recognize them.

Figure 6. The Grok watermark is clearly visible in the image above.

How to Identify a Deepfake

There are several straightforward steps that you can take to spot a fake. We recommend combining healthy skepticism and awareness with the right technology, such as McAfee Deepfake Detector.

While not all AI is malicious or ‘bad’, bad actors commonly use this technology for deepfake scams, misinformation, and disinformation. The deepfakes outlined here appear to have no malicious intent other than to misinform social media users, but we expect they could evolve, with scammers creating similar deepfakes as part of fake donation scams. We therefore advise everyone to stay vigilant and learn how to spot deepfakes online:

- Consider who did the posting. Verify who posted the content. If it’s a friend, did they repost it? Who was the original poster? Could it be a bot or a bogus account? How long has the account been active? What kind of other posts have popped up on it? If an organization posted it, look it up online. Does it seem reputable? This bit of detective work might not provide a definitive answer, but it can let you know if something seems fishy.

- Seek another source. Whether they aim to spread disinformation, commit fraud, or rile up emotions, malicious deepfakes try to pass themselves off as legitimate. Consider a video clip that looks like it got recorded at a press conference. The figure behind the podium says some outrageous things. Did that really happen? Consult other established and respected sources. If they’re not reporting on it, you’re likely dealing with a deepfake.

- Zoom in. A close look at deepfake photos or videos often reveals inconsistencies and flat-out oddities. This could come in the form of six fingers on one hand, or perhaps the skin looks too smooth or there’s something strange with the smile – these are all telltale signs.

- Practice healthy skepticism. Always. With AI tools improving so quickly, we can no longer take things at face value. Malicious deepfakes look to deceive, defraud, and disinform. And the people who create them hope you’ll consume their content in one, unthinking gulp. Scrutiny is key today. Fact-checking is a must, particularly as deepfakes look sharper and sharper as the technology evolves.

Plenty of deepfakes can lure you into sketchy corners of the internet, places where malware and phishing sites take root. Consider using comprehensive online protection software such as McAfee+ and McAfee Deepfake Detector to keep safe. In addition to several features that protect your devices, privacy, and identity, they can warn you of unsafe sites too.